#html data attribute tutorial

Web Scraping 101: Everything You Need to Know in 2025

🕸️ What Is Web Scraping? An Introduction

Web scraping—also referred to as web data extraction—is the process of collecting structured information from websites using automated scripts or tools. Initially driven by simple scripts, it has now evolved into a core component of modern data strategies for competitive research, price monitoring, SEO, market intelligence, and more.

Put simply, web scraping is the ability to turn unstructured web content into organized datasets that businesses can use to make smarter, faster decisions.

💡 What Is Web Scraping Used For?

Businesses and developers alike use web scraping to:

Monitor competitors’ pricing and SEO rankings

Extract leads from directories or online marketplaces

Track product listings, reviews, and inventory

Aggregate news, blogs, and social content for trend analysis

Fuel AI models with large datasets from the open web

Whether it’s web scraping using Python, browser-based tools, or cloud APIs, the use cases are growing fast across marketing, research, and automation.

🔍 Examples of Web Scraping in Action

What is an example of web scraping?

A real estate firm scrapes listing data (price, location, features) from property websites to build a market dashboard.

An eCommerce brand scrapes competitor prices daily to adjust its own pricing in real time.

A SaaS company uses BeautifulSoup in Python to extract product reviews and social proof for sentiment analysis.

For many, web scraping is the first step in automating decision-making and building data pipelines for BI platforms.

⚖️ Is Web Scraping Legal?

Often, yes, provided it is done ethically and responsibly. Scraping publicly available data is legal in many jurisdictions, but scraping private, gated, or copyrighted content can lead to violations.

To stay compliant:

Respect robots.txt rules

Avoid scraping personal or sensitive data

Prefer API access where possible

Follow website terms of service

In short, whether web scraping is legal depends on how you scrape and what you scrape.
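As a rough illustration of the first rule, Python's standard library can read robots.txt rules and report whether a given URL may be fetched. This is only a sketch: the rules, the bot name `example-bot`, and the example.com URLs are made up for the demo, and a real scraper would load the site's actual robots.txt rather than an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def is_allowed(url, user_agent="example-bot"):
    """Return True if the parsed rules permit user_agent to fetch url."""
    return robots.can_fetch(user_agent, url)


allowed_public = is_allowed("https://example.com/products")
allowed_private = is_allowed("https://example.com/private/data")
delay_seconds = robots.crawl_delay("example-bot")
```

Checking each URL before requesting it, and pausing for the advertised crawl delay, keeps a scraper within the limits the site owner has published.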

🧠 Web Scraping with Python: Tools & Libraries

Python is one of the most popular languages for web scraping because of its ease of use and strong ecosystem of libraries.

Popular Python libraries for web scraping include:

BeautifulSoup – simple and effective for HTML parsing

Requests – handles HTTP requests

Selenium – ideal for dynamic JavaScript-heavy pages

Scrapy – robust framework for large-scale scraping projects

Puppeteer – a Node.js library rather than a Python one, used for advanced browser automation

These tools are often used in tutorials like “Web scraping using Python BeautifulSoup” or “Python web scraping library for beginners.”
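To give a feel for what HTML parsing involves, here is a minimal sketch that pulls names and prices out of a small listing. It deliberately uses the standard library's `html.parser` instead of BeautifulSoup so that it runs without installing anything; the markup, the class names (`listing`, `name`, `price`), and the sample values are all invented for the example.

```python
from html.parser import HTMLParser

# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<ul>
  <li class="listing"><span class="name">Loft A</span><span class="price">$1,200</span></li>
  <li class="listing"><span class="name">Studio B</span><span class="price">$950</span></li>
</ul>
"""


class ListingParser(HTMLParser):
    """Collect the name and price of every <li class="listing"> item."""

    def __init__(self):
        super().__init__()
        self.listings = []
        self._field = None  # which field the current <span> holds, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "listing" in classes:
            self.listings.append({})
        elif tag == "span" and self.listings:
            for field in ("name", "price"):
                if field in classes:
                    self._field = field

    def handle_data(self, data):
        # Only keep text that sits inside a recognized <span>.
        if self._field and data.strip():
            self.listings[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_listings(html):
    parser = ListingParser()
    parser.feed(html)
    return parser.listings


listings = parse_listings(SAMPLE_HTML)
```

With BeautifulSoup the same extraction is usually shorter, but the underlying idea is identical: locate the elements that carry the data, then read their text.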

⚙️ DIY vs. Managed Web Scraping

You can choose between:

DIY scraping: Full control, requires dev resources

Managed scraping: Outsourced to experts, ideal for scale or non-technical teams

Use managed scraping services for large-scale needs, or build Python-based scrapers for targeted projects using frameworks and libraries mentioned above.

🚧 Challenges in Web Scraping (and How to Overcome Them)

Modern websites often include:

JavaScript rendering

CAPTCHA protection

Rate limiting and dynamic loading

To solve this:

Use rotating proxies

Drive a headless browser with an automation tool like Selenium

Leverage AI-powered scraping for content variation and structure detection

Deploy scrapers on cloud platforms using containers (e.g., Docker + AWS)
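The rotating-proxy idea can be sketched in a few lines. This is an assumption-laden example rather than a production setup: the proxy addresses are placeholders, and the returned dictionary simply follows the `{"http": ..., "https": ...}` shape that HTTP clients such as Requests accept for their proxy settings.

```python
from itertools import cycle

# Placeholder proxy addresses; substitute the ones your provider gives you.
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]


def make_proxy_rotation(proxies):
    """Return a function that hands out the proxies in round-robin order."""
    pool = cycle(proxies)

    def next_proxy():
        address = next(pool)
        # Use the same proxy for both plain and TLS traffic.
        return {"http": address, "https": address}

    return next_proxy


next_proxy = make_proxy_rotation(PROXIES)
```

Each outgoing request would call `next_proxy()` and pass the result to the HTTP client, so consecutive requests leave from different addresses.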

🔐 Ethical and Legal Best Practices

Scraping must balance business innovation with user privacy and legal integrity. Ethical scraping includes:

Minimal server load

Clear attribution

Honoring opt-out mechanisms

This ensures long-term scalability and compliance for enterprise-grade web scraping systems.
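One simple way to keep server load minimal is to enforce a minimum gap between requests. The sketch below is a hypothetical helper, not part of any scraping library; the `clock` and `sleep` parameters exist only so the behavior can be checked without real waiting.

```python
import time


class Throttle:
    """Block so that consecutive calls to wait() are at least min_interval seconds apart."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous call, None before the first one

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

A scraper would create one `Throttle(2.0)` per site and call `wait()` before every request, which caps the request rate at roughly one every two seconds.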

🔮 The Future of Web Scraping

As demand for real-time analytics and AI training data grows, scraping is becoming:

Smarter (AI-enhanced)

Faster (real-time extraction)

Scalable (cloud-native deployments)

From developers using BeautifulSoup or Scrapy, to businesses leveraging API-fed dashboards, web scraping is central to turning online information into strategic insights.

📘 Summary: Web Scraping 101 in 2025

Web scraping in 2025 is the automated collection of website data, widely used for SEO monitoring, price tracking, lead generation, and competitive research. It relies on powerful tools like BeautifulSoup, Selenium, and Scrapy, especially within Python environments. While scraping publicly available data is generally legal, it's crucial to follow website terms of service and ethical guidelines to avoid compliance issues. Despite challenges like dynamic content and anti-scraping defenses, the use of AI and cloud-based infrastructure is making web scraping smarter, faster, and more scalable than ever—transforming it into a cornerstone of modern data strategies.

🔗 Want to Build or Scale Your AI-Powered Scraping Strategy?

Whether you're exploring AI-driven tools, training models on web data, or integrating smart automation into your data workflows—AI is transforming how web scraping works at scale.

👉 Find AI Agencies specialized in intelligent web scraping on Catch Experts.

#web scraping#what is web scraping#web scraping examples#AI-powered scraping#Python web scraping#web scraping tools#BeautifulSoup Python#web scraping using Python#ethical web scraping#web scraping 101#is web scraping legal#web scraping in 2025#web scraping libraries#data scraping for business#automated data extraction#AI and web scraping#cloud scraping solutions#scalable web scraping#managed scraping services#web scraping with AI

Advanced HTML Interview Questions and Answers: Key Insights for Experienced Developers

As an experienced developer, mastering HTML is essential to your success in web development. When interviewing for advanced positions, you will encounter advanced HTML interview questions and answers that test not only your understanding of basic HTML elements but also your ability to implement complex concepts and strategies in real-world scenarios. These questions are designed to assess your problem-solving skills and your ability to work with HTML in sophisticated projects.

For seasoned developers, HTML interview questions for experienced developers often go beyond simple tag usage and focus on more intricate concepts, such as semantic HTML, accessibility, and optimization techniques. You might be asked, "What is the difference between block-level and inline elements, and how does this affect page layout?" or "How would you implement responsive web design using HTML?" These questions require an in-depth understanding of HTML’s role within modern web design and the ability to apply it effectively to improve both user experience and page performance.

Experienced developers should also be prepared to answer questions on advanced HTML techniques, such as the use of custom data attributes or the new HTML5 semantic elements like <section>, <article>, and <header>. Interviewers may ask, "How do you use HTML5 APIs in conjunction with other technologies?" or "Can you explain how to create and manage forms with custom validation?" Understanding these more advanced features is crucial for ensuring that your HTML code remains up-to-date and compatible with current web standards and practices.
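To make the custom data attribute question concrete, here is a small sketch of how `data-*` attributes can be read from markup. Python is used purely for illustration; in the browser the same values are exposed through an element's `dataset` property (for example, `data-product-id` becomes `dataset.productId`). The button markup and attribute names are invented for the example.

```python
from html.parser import HTMLParser

# Hypothetical markup with two custom data attributes on a button.
SAMPLE_HTML = """
<button class="buy" data-product-id="42" data-action="add-to-cart">Add to cart</button>
<p>No data attributes here.</p>
"""


class DataAttributeParser(HTMLParser):
    """Record every element that carries at least one data-* attribute."""

    def __init__(self):
        super().__init__()
        self.elements = []

    def handle_starttag(self, tag, attrs):
        # Strip the "data-" prefix so keys read like dataset names.
        data = {
            name[len("data-"):]: value
            for name, value in attrs
            if name.startswith("data-")
        }
        if data:
            self.elements.append((tag, data))


def extract_data_attributes(html):
    parser = DataAttributeParser()
    parser.feed(html)
    return parser.elements


found = extract_data_attributes(SAMPLE_HTML)
```

The point an interviewer usually looks for is that data attributes attach application-specific values to an element without inventing non-standard attributes or overloading `class`.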

In summary, advanced HTML interview questions challenge you to demonstrate your expertise in HTML and its application in complex web projects. By preparing for these types of questions, you can showcase your ability to handle sophisticated web development tasks with ease. A strong grasp of HTML’s more advanced features will set you apart from other candidates and increase your chances of securing a role as a senior or lead developer.

At our e-learning tutorial portal, we offer free, easy-to-follow tutorials that teach programming languages, including HTML, through live examples. Our platform is tailored to students who want to learn at their own pace and gain hands-on experience. Whether you are new to programming or an experienced developer looking to refine your skills, our resources will help you prepare for interviews and master the concepts that are critical to success in the tech industry.

Learning Selenium: A Comprehensive and Quick Journey for Beginners and Enthusiasts

Selenium is a powerful yet beginner-friendly tool that allows you to automate web browsers for testing, data scraping, or streamlining repetitive tasks. If you want to advance your career, a structured program such as a Selenium Course in Pune can help, so pick a course that suits your interests and learning path. This blog will guide you through a structured, easy-to-follow journey, perfect for beginners and enthusiasts alike.

What Makes Selenium So Popular?

For those looking to excel in Selenium, a Selenium Online Course is a good option. Look for classes that align with your preferred programming language and learning approach. Selenium is one of the most widely used tools for web automation, and for good reasons:

Open-Source and Free: No licensing costs.

Multi-Language Support: Works with Python, Java, C#, and more.

Browser Compatibility: Supports all major browsers like Chrome, Firefox, and Edge.

Extensive Community: A wealth of resources and forums to help you learn and troubleshoot.

Whether you're a software tester or someone eager to automate browser tasks, Selenium is versatile and accessible.

How Long Does It Take to Learn Selenium?

The time it takes to learn Selenium depends on your starting point:

1. If You’re a Beginner Without Coding Experience

Time Needed: 3–6 weeks

Why? You’ll need to build foundational knowledge in programming (e.g., Python) and basic web development concepts like HTML and CSS.

2. If You Have Basic Coding Skills

Time Needed: 1–2 weeks

Why? You can skip the programming fundamentals and dive straight into Selenium scripting.

3. For Advanced Skills

Time Needed: 6–8 weeks

Why? Mastering advanced topics like handling dynamic content, integrating Selenium with frameworks, or running parallel tests takes more time and practice.

Your Quick and Comprehensive Learning Plan

Here’s a structured roadmap to learning Selenium efficiently:

Step 1: Learn the Basics of a Programming Language

Recommendation: Start with Python because it’s beginner-friendly and well-supported in Selenium.

Key Concepts to Learn:

Variables, loops, and functions.

Handling libraries and modules.

Step 2: Understand Web Development Basics

Familiarize yourself with:

HTML tags and attributes.

CSS selectors and XPath for locating web elements.
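To see what an XPath locator does before involving a browser, you can try one on a small, well-formed snippet with Python's standard library. This is a sketch under a few assumptions: the form markup is invented, `xml.etree.ElementTree` understands only a subset of XPath, and Selenium evaluates XPath inside the real browser rather than this way.

```python
import xml.etree.ElementTree as ET

# Hypothetical login form, written as well-formed XML so ElementTree can parse it.
FORM = """
<form id="login">
  <input type="text" name="username" />
  <input type="password" name="password" />
  <button type="submit" class="primary">Sign in</button>
</form>
"""

root = ET.fromstring(FORM)

# ".//" searches all descendants; "[@name='...']" filters on an attribute value.
password_field = root.find(".//input[@name='password']")
submit_button = root.find(".//button[@class='primary']")
all_inputs = root.findall(".//input")
```

The same expressions, such as `//input[@name='password']`, are the kind of locator you would later hand to Selenium to find the element on a live page.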

Step 3: Install Selenium and Set Up Your Environment

Install Python and the Selenium library.

Download the WebDriver for your preferred browser (e.g., ChromeDriver). Selenium 4.6 and later can also fetch a matching driver automatically through Selenium Manager.

Write and run a basic script to open a browser and navigate to a webpage.
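A first script along those lines might look like the sketch below. It assumes Selenium 4 is installed and that Chrome plus a matching driver are available on your machine; the function name `fetch_page_title` is just an illustrative choice.

```python
def fetch_page_title(url):
    """Open url in Chrome, return the page title, and close the browser."""
    # Imported here so this file can still be loaded where Selenium is not installed.
    from selenium import webdriver

    driver = webdriver.Chrome()  # assumes Chrome and a compatible driver are available
    try:
        driver.get(url)
        return driver.title
    finally:
        driver.quit()  # always close the browser, even if navigation fails
```

Calling `fetch_page_title("https://www.example.com")` should briefly open a Chrome window, load the page, and return its title as a string.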

Step 4: Master Web Element Interaction

Learn to identify and interact with web elements using locators like:

ID

Name

CSS Selector

XPath

Practice clicking buttons, filling out forms, and handling dropdown menus.

Step 5: Dive Into Advanced Features

Handle pop-ups, alerts, and multiple browser tabs.

Work with dynamic content and implicit/explicit waits.

Automate repetitive tasks like form submissions or web scraping.
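The idea behind an explicit wait is to poll for a condition until it holds or a timeout expires. The helper below is a plain-Python sketch of that idea, not Selenium's own implementation; in real tests you would normally use Selenium's `WebDriverWait` together with its expected conditions.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once timeout seconds have passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)
```

In a browser script, `condition` would be a small function that looks for the element you need and returns it once it exists, which is more reliable for dynamic content than a fixed `time.sleep`.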

Step 6: Explore Frameworks and Testing Integration

Learn how to use testing frameworks like TestNG (Java) or Pytest (Python) to structure and scale your tests.

Understand how to generate reports and run parallel tests.

Step 7: Build Real-World Projects

Create test scripts for websites you use daily.

Automate login processes, data entry tasks, or form submissions.

Experiment with end-to-end test cases to mimic user actions.

Tips for a Smooth Learning Journey

Start Small: Focus on simple tasks before diving into advanced topics.

Use Resources Wisely: Leverage free tutorials, forums, and YouTube videos. Platforms like Udemy and Coursera offer structured courses.

Practice Consistently: Regular hands-on practice is key to mastering Selenium.

Join the Community: Participate in forums like Stack Overflow or Reddit for help and inspiration.

Experiment with Real Websites: Automate tasks on real websites to gain practical experience.

What Can You Achieve with Selenium?

By the end of your Selenium learning journey, you’ll be able to:

Write and execute browser automation scripts.

Test web applications efficiently with minimal manual effort.

Integrate Selenium with testing tools to build comprehensive test suites.

Automate repetitive browser tasks to save time and effort.

Learning Selenium is not just achievable—it’s exciting and rewarding. Whether you’re a beginner or an enthusiast, this structured approach will help you grasp the basics quickly and progress to more advanced levels. In a matter of weeks, you’ll be automating browser tasks, testing websites, and building projects that showcase your newfound skills.

So, why wait? Start your Selenium journey today and open the door to endless possibilities in web automation and testing!

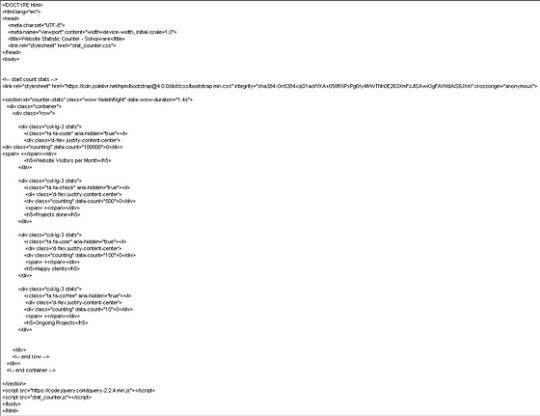

How To Create A Statistic Counter For Your Website Using HTML, CSS & JavaScript — Sohojware

Do you ever wonder how many visitors your website attracts? Or perhaps you’re curious about how many times a specific button is clicked? Website statistics provide valuable insight into user behavior, and a statistic counter is a simple way to visualize that data. In this comprehensive guide by Sohojware, we’ll delve into creating a basic statistic counter using HTML, CSS, and JavaScript.

This guide is tailored for users in the United States who want to enhance their website with an engaging statistic counter. Whether you’re a seasoned developer or just starting out, this tutorial will equip you with the necessary steps to implement a statistic counter on your website.

Why Use a Statistic Counter?

Website statistic counters offer a multitude of benefits. Here’s a glimpse into why they’re so valuable:

Track Visitor Engagement: Statistic counters provide real-time data on how many visitors your website receives. This information is crucial for understanding your website’s traffic patterns and gauging its overall effectiveness.

Monitor User Interaction: By placing statistic counters strategically on your website (e.g., near buttons or downloads), you can track how often specific elements are interacted with. This allows you to identify areas that resonate with your audience and areas for improvement.

Boost User Confidence: Well-designed statistic counters can showcase the popularity of your website, fostering trust and credibility among visitors. Imagine a counter displaying a high number of visitors — it subconsciously assures users that they’ve landed on a valuable resource.

Motivate Action: Strategic placement of statistic counters can encourage visitors to take desired actions. For instance, a counter displaying the number of downloads for a particular resource can entice others to download it as well.

Setting Up the Project

Before we dive into the code, let’s gather the necessary tools:

Text Editor: Any basic text editor like Notepad (Windows) or TextEdit (Mac) will suffice. For a more feature-rich experience, consider code editors like Visual Studio Code or Sublime Text.

Web Browser: You’ll need a web browser (e.g., Chrome, Firefox, Safari) to view the final result.

Once you have these tools ready, let’s create the files for our project:

Create a folder named “statistic-counter”.

Within the folder, create three files: index.html, style.css, and script.js.

Building the HTML Structure

Let’s break down the code:

DOCTYPE declaration: Specifies the document type as HTML.

HTML tags: The <html> and </html> tags define the root element of the HTML document.

Lang attribute: Specifies the document language as English (en).

Meta tags: These tags provide metadata about the webpage, including character encoding (charset=UTF-8) and viewport configuration (viewport) for optimal display on various devices.

Title: Sets the title of the webpage displayed on the browser tab as “Website Statistic Counter — Sohojware”.

Link tag: Links the external CSS stylesheet (style.css) to the HTML document.

Body: The <body> tag contains the content displayed on the webpage.

Heading: A heading tag such as <h1> creates a heading element with the text “Website Statistic Counter”.

Counter container: A container element (such as a <div>) with the ID “counter-container” wraps the counter itself.

Counter span: The <span> element with the ID “counter” displays the numerical value of the statistic counter. The initial value is set to 0.

Script tag: The <script> tag references the external JavaScript file (script.js), which will contain the logic for updating the counter.

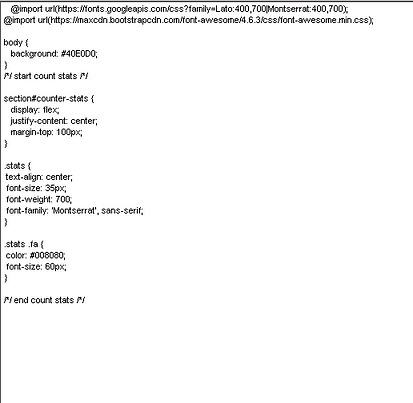

Styling the Counter

Let’s break down the CSS styles:

Body: Sets the font family for the entire body and centers the content.

Heading: Adds a bottom margin to the heading for better spacing.

Counter container: Styles the container with a border, padding, width, and centers it horizontally.

Counter: Sets the font size and font weight for the counter element, making it prominent.

Implementing the JavaScript Logic

Let’s break down the JavaScript code:

Variable declaration: Declares variables counter and count. counter references the HTML element with the ID “counter”, and count stores the current counter value.

updateCounter function: Defines a function named updateCounter that increments the count variable and updates the text content of the counter element.

setInterval: Calls the updateCounter function every 1000 milliseconds (1 second), creating a continuous update effect.

Running the Counter

Save all the files and open the index.html file in your web browser. You should see a webpage with the heading “Website Statistic Counter” and a counter that increments every second.

Customization and Enhancements

This is a basic example of a statistic counter. You can customize it further by:

Changing the counter speed: Modify the setInterval interval to adjust how frequently the counter updates.

Adding a start/stop button: Implement a button to start and stop the counter.

Displaying different units: Instead of a raw number, display the counter in units like “views” or “downloads”.

Integrating with analytics tools: Connect the counter to analytics tools like Google Analytics to track more detailed statistics.

Styling the counter: Experiment with different CSS styles to customize the appearance of the counter.

FAQs

1. Can I use a statistic counter to track specific events on my website?

Yes, you can. By placing statistic counters near buttons or links, you can track how often those elements are clicked or interacted with.

2. How often should I update the counter?

The update frequency depends on your specific use case. For a real-time counter, updating every second might be suitable. For less frequent updates, you can increase the interval.

3. Can I customize the appearance of the counter?

Absolutely! You can modify the CSS styles to change the font, color, size, and overall appearance of the counter.

4. Is it possible to integrate a statistic counter with other website elements?

Yes, you can integrate statistic counters with other elements using JavaScript. For example, you could display the counter value within a specific section or trigger other actions based on the counter’s value.

5. How can I ensure the accuracy of the statistic counter?

While JavaScript can provide a reliable way to track statistics, it’s essential to consider potential limitations. Factors like browser caching, ad blockers, and user scripts can influence the accuracy of the counter. If you require highly accurate statistics, it’s recommended to use server-side tracking mechanisms or analytics tools.

By following these steps and exploring the customization options, you can create a dynamic and informative statistic counter that enhances your website’s user experience and provides valuable insights into your audience’s behavior.

#sohojware#web development#web design#appsdevelopment#software development#css#html#java script#programming languages

Basics of HTML

HTML (HyperText Markup Language) is the standard language used to create web pages. It provides the structure and content of a webpage, which is then styled with CSS and made interactive with JavaScript. Let’s go through the basics of HTML:

1. HTML Document Structure

An HTML document starts with a declaration and is followed by a series of elements enclosed in tags:

<!DOCTYPE html>
<html>
  <head>
    <title>My First Webpage</title>
  </head>
  <body>
    <h1>Welcome to My Website</h1>
    <p>This is a paragraph of text on my first webpage.</p>
  </body>
</html>

<!DOCTYPE html>: Declares the document type and version of HTML. It helps the browser understand that the document is written in HTML5.

<html>: The root element that contains all other HTML elements on the page.

<head>: Contains meta-information about the document, like its title and links to stylesheets or scripts.

<title>: Sets the title of the webpage, which is displayed in the browser's title bar or tab.

<body>: Contains the content of the webpage, like text, images, and links.

2. HTML Tags and Elements

Tags: Keywords enclosed in angle brackets, like <h1> or <p>. Tags usually come in pairs: an opening tag (<h1>) and a closing tag (</h1>).

Elements: Consist of a start tag, content, and an end tag. For example:

<h1>Hello, World!</h1>

3. HTML Attributes

Attributes provide additional information about HTML elements. They are always included in the opening tag and are written as name="value" pairs:

<a href="https://www.example.com">Visit Example</a>
<img src="image.jpg" alt="A descriptive text">

href: Used in the <a> (anchor) tag to specify the link's destination.

src: Specifies the source of an image in the <img> tag.

alt: Provides alternative text for images, used for accessibility and if the image cannot be displayed.

4. HTML Headings

Headings are used to create titles and subtitles on your webpage:

<h1>This is a main heading</h1>
<h2>This is a subheading</h2>
<h3>This is a smaller subheading</h3>

<h1> to <h6>: Represents different levels of headings, with <h1> being the most important and <h6> the least.

5. HTML Paragraphs

Paragraphs are used to write blocks of text:

<p>This is a paragraph of text. HTML automatically adds some space before and after paragraphs.</p>

<p>: Wraps around blocks of text to create paragraphs.

6. HTML Line Breaks and Horizontal Lines

Line Break (<br>): Used to create a line break (new line) within text.

Horizontal Line (<hr>): Used to create a thematic break or a horizontal line:

<p>This is the first line.<br>This is the second line.</p>
<hr>
<p>This is text after a horizontal line.</p>

7. HTML Comments

Comments are not displayed in the browser and are used to explain the code:

<!-- This is a comment -->
<p>This text will be visible.</p>

<!-- Comment -->: Wraps around text to make it a comment.

8. HTML Links

Links allow users to navigate from one page to another:

<a href="https://www.example.com">Click here to visit Example</a>

<a>: The anchor tag creates a hyperlink. The href attribute specifies the URL to navigate to when the link is clicked.

9. HTML Images

Images can be embedded using the <img> tag:

<img src="image.jpg" alt="Description of the image">

<img>: Used to embed images. The src attribute specifies the image source, and alt provides descriptive text.

10. HTML Lists

HTML supports ordered and unordered lists:

Unordered List (<ul>):

<ul>
  <li>Item 1</li>
  <li>Item 2</li>
  <li>Item 3</li>
</ul>

Ordered List (<ol>):

<ol>
  <li>First item</li>
  <li>Second item</li>
  <li>Third item</li>
</ol>

<ul>: Creates an unordered list with bullet points.

<ol>: Creates an ordered list with numbers.

<li>: Represents each item in a list.

11. HTML Metadata

Metadata is data that provides information about other data. It is placed within the <head> section and includes information like character set, author, and page description:

<meta charset="UTF-8">
<meta name="description" content="An example of HTML basics">
<meta name="keywords" content="HTML, tutorial, basics">
<meta name="author" content="Saide Hossain">

12. HTML Document Structure Summary

Here’s a simple HTML document combining all the basic elements:

<!DOCTYPE html>
<html>
  <head>
    <title>My First HTML Page</title>
    <meta charset="UTF-8">
    <meta name="description" content="Learning HTML Basics">
    <meta name="keywords" content="HTML, basics, tutorial">
    <meta name="author" content="Saide Hossain">
  </head>
  <body>
    <h1>Welcome to My Website</h1>
    <p>This is my first webpage. I'm learning HTML!</p>
    <p>HTML is easy to learn and fun to use.</p>
    <hr>
    <h2>Here are some of my favorite websites:</h2>
    <ul>
      <li><a href="https://www.example.com">Example.com</a></li>
      <li><a href="https://www.anotherexample.com">Another Example</a></li>
    </ul>
    <h2>My Favorite Image:</h2>
    <img src="https://images.pexels.com/photos/287240/pexels-photo-287240.jpeg?auto=compress&cs=tinysrgb&w=1260&h=750&dpr=1" width="300" alt="A beautiful view">
    <hr>
    <p>Contact me at <a href="mailto:[email protected]">[email protected]</a></p>
  </body>
</html>

Key Takeaways

HTML is all about using tags to structure content.

The basic building blocks include headings, paragraphs, lists, links, images, and more.

Every HTML document needs a proper structure, starting with <!DOCTYPE html> and wrapping content within <html>, <head>, and <body> tags.

With these basics, you can start building your web pages!

Source: HTML TUTE BLOG

AngularJS Training in India

Introduction

As the demand for dynamic web applications continues to grow, AngularJS, a powerful JavaScript framework developed by Google, remains a popular choice for developers. It simplifies the process of building complex web applications by extending HTML's syntax, making it an essential tool for front-end developers. In India, the tech industry is booming, and there is a significant demand for skilled AngularJS developers. This has led to an increase in the availability of AngularJS training programs across the country.

Why Choose AngularJS?

AngularJS offers a plethora of features that make it an attractive framework for web development:

Two-Way Data Binding: Automatically synchronizes data between the model and the view components.

Dependency Injection: Simplifies the management of dependencies, making the code more modular and easier to maintain.

Directives: Extend HTML by creating custom tags and attributes.

MVC Architecture: Separates the application into distinct components, enhancing maintainability and scalability.

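Community Support: AngularJS was developed by Google and has a large community and a deep archive of resources, although its official long-term support ended in December 2021 and it no longer receives regular updates.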

The Growing Demand for AngularJS Skills

In India, the IT industry is one of the fastest-growing sectors, and web development is a critical component of this growth. Companies ranging from startups to multinational corporations are seeking skilled AngularJS developers to build responsive and efficient web applications. This demand is driven by the need for businesses to provide seamless digital experiences to their users.

Top AngularJS Training Institutes in India

Several training institutes in India offer comprehensive AngularJS courses designed to equip developers with the necessary skills. Here are some of the top institutes:

Edureka: Known for its high-quality online courses, Edureka offers a detailed AngularJS training program that covers basic to advanced concepts.

Simplilearn: Offers a blended learning approach with both self-paced learning and live instructor-led sessions.

Zeolearn: Provides hands-on training with real-world projects and assignments.

Intellipaat: Known for its comprehensive curriculum and 24/7 support, Intellipaat's AngularJS course is designed for both beginners and experienced developers.

Coursera: Partnering with top universities and organizations, Coursera offers flexible learning schedules and certificates from recognized institutions.

Benefits of AngularJS Training

Career Advancement: Mastering AngularJS opens up numerous job opportunities in the web development sector.

Higher Salary: Skilled AngularJS developers are in high demand, leading to better salary prospects.

Versatility: AngularJS knowledge can be applied across various industries, from e-commerce to finance.

Community and Resources: Access to a vast community and numerous resources, including tutorials, forums, and libraries, helps in continuous learning and problem-solving.

Conclusion

Given the growing demand for dynamic web applications, AngularJS remains a vital skill for web developers in India. With numerous training institutes offering specialized courses, aspiring developers have ample opportunities to master this framework and advance their careers. Whether you are a beginner looking to enter the field of web development or an experienced developer aiming to enhance your skills, AngularJS training in India can provide the knowledge and expertise needed to succeed in the competitive tech industry.

#angular js development services#AngularJS training#angular js development#angular#angular js training institute

How To Scrape Yelp Reviews: A Python Tutorial For Beginners

Yelp is an American company that publishes crowd-sourced information and reviews about local businesses. The reviews come from real customers of those businesses, and Yelp hosts one of the largest collections of business reviews on the internet.

Scraping Yelp review data, whether with a dedicated scraper tool or with Python libraries, can reveal useful trends and figures. These insights can help you improve your own products or convert free users into paying customers.

Because Yelp categorizes a wide range of businesses, including those in your niche, scraping its data can give you business names, contact details, addresses, and business types. This speeds up the search for potential customers.

What is Yelp API?

The Yelp API is a web service set that allows developers to retrieve detailed information about various businesses and reviews submitted by Yelp users. Here's a breakdown of what the Yelp restaurant API offers and how it works:

Access to Yelp's Data

The API provides access to Yelp's database of business listings, which includes names, locations, phone numbers, operating hours, and customer reviews.

Search Functionality

You can search business listings through the API by location, category, and rating, which helps you find or filter particular types of businesses or those in a particular region.

Business Details

For a specific business, the API can return further details such as its price range, photos of the interior, and menus. This is useful when you need a fuller picture of a business.

Reviews

You can retrieve reviews for a business, including the review text, the star rating, and the date of the review. This is useful for analyzing customer sentiment toward specific products or services.

Authentication

Before integrating the Yelp API into an application, the developer must obtain an API key, which authenticates requests to the Yelp platform.

Rate Limits

The API enforces usage limits: only a certain number of requests is allowed within a given time frame. This keeps usage fair and prevents any single client from straining the system.

Documentation and Support

Yelp provides documentation and resources for developers who want to use the API in their applications, including example queries and descriptions of the data structures the API returns.
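To make the search and authentication points concrete, here is a minimal sketch of how an authenticated search request might be assembled. The endpoint URL, the `term`, `location`, and `limit` parameters, the Bearer header scheme, and the `YELP_API_KEY` environment variable are assumptions written from memory, so check them against Yelp's official documentation. The sketch only builds the request and does not send it.

```python
import os
import urllib.parse
import urllib.request

# Assumed endpoint for business search; confirm it in the official docs.
SEARCH_ENDPOINT = "https://api.yelp.com/v3/businesses/search"


def build_search_request(api_key, term, location, limit=5):
    """Build (but do not send) an authenticated business search request."""
    query = urllib.parse.urlencode(
        {"term": term, "location": location, "limit": limit}
    )
    request = urllib.request.Request(f"{SEARCH_ENDPOINT}?{query}")
    # The API key travels in the Authorization header (assumed Bearer scheme).
    request.add_header("Authorization", f"Bearer {api_key}")
    return request


# YELP_API_KEY is a hypothetical environment variable name.
api_key = os.environ.get("YELP_API_KEY", "placeholder-key")
request = build_search_request(api_key, "coffee", "Seattle")
print(request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it; keeping the key in an environment variable avoids hard-coding it in source files.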

What are the Tools to Scrape Yelp Review Data?

Web scraping Yelp reviews involves using specific tools to extract data from their website. Here are some popular tools and how they work:

BeautifulSoup

BeautifulSoup is a Python library that helps you parse HTML and XML documents. It allows you to navigate and search through a webpage to find specific elements, like business names or addresses. For example, you can use BeautifulSoup to pull out all the restaurant names listed on a Yelp page.

Selenium

Selenium is a browser automation tool with Python bindings. It lets you interact with web pages just like a human would, clicking buttons and navigating through multiple pages to collect data. Selenium can be used to automate the process of clicking through different pages on Yelp and scraping data from each page.

Scrapy

Scrapy is a robust web scraping framework for Python. It's designed to efficiently scrape large amounts of data and can be combined with BeautifulSoup and Selenium for more complex tasks. Scrapy can handle more extensive scraping tasks, such as gathering data from multiple Yelp pages and saving it systematically.

ParseHub

ParseHub is a web scraping tool that requires no coding skills. Its user-friendly interface allows you to create templates and specify the data you want to extract. For example, you can set up a ParseHub project to identify elements like business names and ratings on Yelp, and the platform will handle the extraction.

How to Avoid Getting Blocked While Scraping Yelp?

Yelp's website changes constantly to meet users' expectations, which means the scraper you build today might not work as effectively in the future. The practices below reduce the chance of being blocked.

Respect Robots.txt

Before you start scraping Yelp, it's essential to check their robots.txt file. This file tells web crawlers which parts of the site can be accessed and which are off-limits. By following the directives in this file, you can avoid scraping pages that Yelp doesn't want automated access to. For example, it might specify that you shouldn't scrape pages only for logged-in users.
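The robots.txt check can be automated with Python's standard library. The sketch below parses a small set of rules and asks whether a given URL may be fetched. The rules, the `my-scraper` user-agent name, and the example.com URLs are hypothetical and are not Yelp's actual robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only; not Yelp's actual robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Allow: /biz/
"""


def build_parser(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)
business_allowed = parser.can_fetch(
    "my-scraper", "https://www.example.com/biz/some-business"
)
account_allowed = parser.can_fetch(
    "my-scraper", "https://www.example.com/account/settings"
)
print(business_allowed, account_allowed)
```

Against a live site you would call `set_url()` with the site's robots.txt address and then `read()` instead of parsing a string.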

User-Agent String

When making requests to Yelp's servers, using a legitimate user-agent string is crucial. This string identifies the browser or device performing the request. When a user-agent string mimics the appearance of a legitimate browser, it is less likely to be recognized as a bot. Avoid using the default user agent provided by scraping libraries, as they are often well-known and can quickly be flagged by Yelp's security systems.

Request Throttling

Implement request throttling to avoid overwhelming Yelp's servers with too many requests in a short period of time. This means adding delays between each request to simulate human browsing behavior. You can do this using sleep functions in your code. For example, you might wait a few seconds between each request to give Yelp's servers a break and reduce the likelihood of being flagged as suspicious activity.

import random
import time

import requests

def make_request(url):
    # Mimic a real browser's user-agent
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'
    }
    response = requests.get(url, headers=headers)
    if response.status_code == 200:
        # Process the response
        pass
    else:
        # Handle errors or blocks
        pass
    # Wait for 2 to 5 seconds before the next request
    time.sleep(2 + random.random() * 3)

# Example usage
make_request('https://www.yelp.com/biz/some-business')

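IP Rotation

If you are sending a lot of requests, use proxy servers to rotate your IP address and lower your risk of being blocked. An example in Python using proxies:

import requests

# Placeholder proxy address; replace it with a proxy you are authorized to use.
# Most proxies accept plain HTTP connections even when the target URL is HTTPS,
# so both entries usually use the http:// scheme.
proxies = {
    'http': 'http://your_proxy_address:port',
    'https': 'http://your_proxy_address:port',
}

response = requests.get('https://www.yelp.com/biz/some-business', proxies=proxies)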
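Rotating through several proxies, rather than using a single one, might look like the sketch below. The proxy addresses are hypothetical placeholders, and round-robin is only one possible rotation policy. Each dictionary it yields has the shape that the `proxies` argument of `requests.get` expects.

```python
from itertools import cycle

# Hypothetical proxy endpoints; replace with proxies you are authorized to use.
PROXY_POOL = [
    "http://proxy-a.example.com:8080",
    "http://proxy-b.example.com:8080",
    "http://proxy-c.example.com:8080",
]


def proxy_rotation(pool):
    """Yield requests-style proxy dictionaries, cycling through the pool forever."""
    for address in cycle(pool):
        yield {"http": address, "https": address}


rotation = proxy_rotation(PROXY_POOL)
# Take one proxy configuration per outgoing request.
chosen = [next(rotation) for _ in range(4)]
for proxies in chosen:
    print(proxies["https"])
```

After the pool is exhausted the rotation starts again from the first proxy, so the fourth request reuses the first address.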

Be Ready to Manage CAPTCHAs

Yelp could ask for a CAPTCHA to make sure you're not a robot. It can be difficult to handle CAPTCHAs automatically, and you might need to use outside services.

Use a Headless Browser

If you need to run JavaScript or handle complicated interactions, drive a headless browser with a tool such as Puppeteer or Selenium. An example with Selenium in Python:

from selenium import webdriver
from selenium.webdriver.chrome.options import Options

options = Options()
# The options.headless attribute was removed in recent Selenium 4 releases;
# pass the Chrome flag directly instead.
options.add_argument('--headless=new')

driver = webdriver.Chrome(options=options)
try:
    driver.get('https://www.yelp.com/biz/some-business')
    # Process the page
finally:
    # Always close the browser, even if processing fails
    driver.quit()

Adhere to Ethical and Legal Considerations

It's important to realize that scraping Yelp might be against their terms of service. Always act ethically and consider the legal consequences of your actions.

API as an Alternative

Check whether Yelp's official API covers your needs. The official API is the most dependable and lawful way to access Yelp's data.

How to Scrape Yelp Reviews Using Python

Yelp review data can provide valuable insights for both companies and researchers. In this tutorial, we'll go over how to scrape Yelp reviews ethically and effectively using Python.

The Yelp Web Scraping Environment

The code sends HTTP requests with the Python requests library and parses HTML with lxml.

Both requests and lxml are external Python libraries, so you need to install them with pip:

pip install lxml requests

Data Acquired From Yelp

The code scrapes Yelp's search results page to obtain the following fields:

Company name

Rank

Number of reviews

Ratings

Categories

Range of prices

Yelp URL

In the JSON data found within a script tag on the search results page, you'll discover all these details. You won't need to navigate through individual data points using XPaths.
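A minimal sketch of reading JSON embedded in a script tag follows. It uses only the standard library rather than lxml so that it runs without extra installs. The sample HTML, the `application/json` script type, and the `results`, `name`, `rating`, and `reviewCount` keys are hypothetical stand-ins; Yelp's real page structure may differ and changes over time.

```python
import json
from html.parser import HTMLParser


class JsonScriptExtractor(HTMLParser):
    """Collect the parsed contents of <script type="application/json"> tags."""

    def __init__(self):
        super().__init__()
        self._in_json_script = False
        self._chunks = []
        self.payloads = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/json":
            self._in_json_script = True
            self._chunks = []

    def handle_data(self, data):
        # Script contents can arrive in several pieces, so buffer them.
        if self._in_json_script:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_script:
            self.payloads.append(json.loads("".join(self._chunks)))
            self._in_json_script = False


# Hypothetical page fragment; not copied from Yelp.
SAMPLE_HTML = (
    '<html><body><script type="application/json">'
    '{"results": [{"name": "Sample Cafe", "rating": 4.5, "reviewCount": 120}]}'
    "</script></body></html>"
)

extractor = JsonScriptExtractor()
extractor.feed(SAMPLE_HTML)
first_business = extractor.payloads[0]["results"][0]
print(first_business["name"], first_business["rating"])
```

Once the JSON is loaded, every field is an ordinary dictionary lookup, which is why no per-field XPath is needed for the search results page.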

Additionally, the code will make HTTPS requests to each business listing's URL extracted earlier and gather further details. It utilizes XPath syntax to pinpoint and extract these additional details, such as:

Name

Featured info

Working hours

Phone number

Address

Rating

Yelp URL

Price Range

Category

Review Count

Longitude and Latitude

Website

The Yelp Web Scraping Code

To scrape Yelp reviews using Python, begin by importing the required libraries. The core libraries needed for scraping Yelp data are requests and lxml. Other packages imported include json, argparse, urllib.parse, re, and unicodecsv.

json: This module is essential for parsing JSON content from Yelp and saving the data to a JSON file.

argparse: Allows passing arguments from the command line, facilitating customization of the scraping process.

unicodecsv: Facilitates saving scraped data as a CSV file, ensuring compatibility with different encoding formats.

urllib.parse: Enables manipulation of the URL string, aiding in constructing and navigating through URLs during scraping.

re: Handles regular expressions, which are useful for pattern matching and data extraction tasks within the scraped content.
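As a rough illustration of how argparse and urllib.parse might work together, the sketch below reads a place and a search query from the command line and builds a search URL. The argument names and the `find_desc` and `find_loc` query parameters are assumptions for illustration and are not taken from the original script.

```python
import argparse
from urllib.parse import urlencode


def parse_arguments(argv=None):
    """Read the search location and keywords from the command line."""
    parser = argparse.ArgumentParser(description="Build a Yelp search URL.")
    parser.add_argument("place", help="Location to search, e.g. a city or ZIP code")
    parser.add_argument("search_query", help="Keywords, e.g. Restaurants")
    return parser.parse_args(argv)


def build_search_url(place, search_query):
    """URL-encode the inputs; the parameter names are assumed."""
    query_string = urlencode({"find_desc": search_query, "find_loc": place})
    return f"https://www.yelp.com/search?{query_string}"


# Passing a list simulates: python script.py "Boston, MA" Restaurants
args = parse_arguments(["Boston, MA", "Restaurants"])
search_url = build_search_url(args.place, args.search_query)
print(search_url)
```

Calling `parse_arguments()` with no list makes argparse read the real command-line arguments instead.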

Content Source https://www.reviewgators.com/beginners-guide-to-scrape-yelp-reviews.php


FakeNet-NG: Powerful Malware Analysis and Network Simulation

What is FakeNet-NG?

FakeNet-NG is a dynamic network analysis tool that simulates network services and captures network requests to aid malware research. The FLARE team maintains and improves the tool to increase its functionality and usability. Although FakeNet-NG is highly configurable and platform-agnostic, it lacked a user-friendly, intuitive presentation of the network data it captured, which made it harder to find pertinent Network-Based Indicators (NBIs) quickly. To address this and further improve usability, Google extended FakeNet-NG to generate HTML-based output that lets you view, explore, and share the captured network data.

Interactive HTML-Based Output

The new interactive output of FakeNet-NG is an HTML page with inline CSS and JavaScript, offered in addition to the existing text-based output. FakeNet-NG creates each report from a Jinja2 template that it fills with the network data it has captured. The final report is saved to the current working directory and can be opened in your preferred browser. You can also share the file with other people to analyze the captured network activity together.

Captured network data can be selected, filtered, and copied using the HTML interface.

Creation and Execution

Inside FakeNet-NG

FakeNet-NG Tutorial

The three main components that make up FakeNet-NG’s modular architecture are as follows:

Diverter: The main component on the target system. It intercepts all incoming and outgoing network traffic and, by default, forwards packets to the Proxy Listener for further processing.

Proxy Listener: Sits between the Diverter and the protocol-specific Listeners. It inspects application-layer data and, based on factors such as port, protocol, and data content, determines the most suitable Listener for each packet.

Protocol-specific Listeners: Process requests for their particular protocols and generate responses that resemble authentic server behavior. Examples include the HTTP, FTP, and DNS Listeners.

Extending NBI Analysis Using FakeNet-NG

To let FakeNet-NG produce thorough, informative reports, essential components had to be enhanced to capture and store network data and to associate it with the source activity.

The enhancements comprised:

Improved data storage: The Diverter stores additional information, including process IDs, process names, and the links between proxy-initiated and original source ports.

NBI mapping: The Diverter maps network data to source processes, so network activity can be attributed unambiguously.

Information sharing: The Proxy Listener passes relevant packet metadata to the Diverter to ensure accurate data tracking.

FakeNet-NG creates the interactive HTML-based output by combining the data captured by each component.

NetworkMode: Selects the network mode in which FakeNet-NG runs.

Valid settings:

SingleHost: Manipulate traffic from local processes.

MultiHost: Manipulate traffic from other systems.

Auto: Select the NetworkMode that works best for the current platform.

Not every platform currently supports every NetworkMode setting. Current support is as follows:

Windows supports only SingleHost.

Linux supports MultiHost and, experimentally, SingleHost mode, with the exception of process, port, and host blacklisting and whitelisting.

For now, leave this set to Auto to get SingleHost mode on Windows and MultiHost mode on Linux.

DNS-related settings and the Windows implementation:

ModifyLocalDNS: Points the local machine's DNS service to FakeNet-NG's DNS listener.

StopDNSService: Stops the Windows DNS client service (Dnscache). This lets FakeNet-NG see the actual processes that resolve domains instead of the generic 'svchost.exe' process.

Linux implementation

The Linux version of the Diverter supports the following settings:

LinuxRedirectNonlocal: Specifies which externally facing network interfaces to redirect to FakeNet-NG when it is used to simulate Internet connectivity for a different host.

LinuxFlushIptables: Flushes all iptables rules before adding rules for FakeNet-NG. The previous rules are restored as long as the Linux Diverter's termination sequence is not interrupted.

LinuxFlushDnsCommand: Specifies the command, if needed, that clears the DNS resolver cache for your Linux distribution.

DebugLevel: Selects which detailed debug events to display.
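To show how settings like these might sit together in an INI-style file, here is a small sketch that parses a sample configuration with Python's configparser. The `[Diverter]` section name, the `key: value` layout, and every value shown are assumptions for illustration and are not copied from the configuration file that ships with FakeNet-NG.

```python
import configparser

# Hypothetical configuration fragment; names and values are illustrative.
SAMPLE_CONFIG = """\
[Diverter]
NetworkMode: Auto
DebugLevel: Off
ModifyLocalDNS: No
StopDNSService: Yes
LinuxFlushIptables: Yes
"""

config = configparser.ConfigParser()
config.read_string(SAMPLE_CONFIG)

diverter = config["Diverter"]
network_mode = diverter.get("NetworkMode")
# getboolean understands yes/no, on/off, true/false, and 1/0.
modify_local_dns = diverter.getboolean("ModifyLocalDNS")
stop_dns_service = diverter.getboolean("StopDNSService")

print(network_mode, modify_local_dns, stop_dns_service)
```

Reading the file this way is only a convenient way to inspect settings from a script; FakeNet-NG itself decides how its configuration is interpreted.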

Upcoming projects

There is still room to improve FakeNet-NG's HTML-based output so that analysts benefit even more. A key addition would be a communication graph that visualizes network behavior. This widely used approach maps processes to their network requests, with edges connecting process nodes to other nodes such as IP addresses or domain names. Combining FakeNet-NG with this kind of visualization would let you grasp a program's communication patterns quickly.

Filter out unnecessary network traffic: Reduce the noise produced by benign Windows services and apps so that the most important network information stands out.

Include ICMP traffic in the HTML report: Present a more complete overview of network activity by displaying ICMP-based network data.

Add pre-set filters and filtering options: Provide pre-set filters and easy-to-use filtering tools to exclude typical Microsoft network traffic.

Improve the usability of exported network data: Let the user choose which information is included in the exported Markdown data, and improve the formatting of that Markdown.

In conclusion

As the go-to tool for dynamic network analysis in malware research, FakeNet-NG keeps getting better. The interactive HTML-based output is intended to make it more useful by letting you navigate and analyze even the largest and most intricate network captures in a clear, simple, and visually appealing way.

To make your analysis of dynamic network data more efficient, the team invites you to try the new HTML-based output and take advantage of its filtering, selection, and copying features. You can download the most recent version of FakeNet-NG from the project's GitHub repository, contribute to the project, or leave feedback.

Read more on govindhtech.com


Mastering Xpath in Selenium: All Tactics and Examples

Are you looking to level up your Selenium automation skills in Python? Understanding XPath is crucial for locating elements on a web page, especially when other locating strategies fall short. In this comprehensive guide, we'll dive deep into XPath in Selenium automation with Python, exploring various tactics and providing examples to help you master this powerful tool.

Table of Contents

1. What is XPath?
2. Why Use XPath in Selenium?
3. Basic XPath Expressions
4. Using XPath Axes
5. XPath Functions
6. Combining XPath Expressions
7. Handling Dynamic Elements
8. Best Practices for Using XPath in Selenium
9. Examples of XPath in Selenium
10. Conclusion

What is XPath?

XPath (XML Path Language) is a query language used for selecting nodes from an XML document. In Selenium with Python, XPath is used to locate elements on a web page based on their attributes, such as id, class, and name. It provides a powerful way to navigate the HTML structure of a web page and interact with elements.

Why Use XPath in Selenium?

XPath is particularly useful in Selenium when other locating strategies, such as id or class name, are not available or reliable. It allows you to locate elements based on their position in the HTML structure, making it a versatile tool for automation testing in Python.

Basic XPath Expressions

XPath expressions can be used to locate elements based on various criteria, such as attributes, text content, and position in the HTML structure. Here are some basic XPath expressions:

//tagname: Selects all elements with the specified tag name.

//*[@attribute='value']: Selects all elements with the specified attribute and value.

//tagname[@attribute='value']: Selects elements with the specified tag name, attribute, and value.
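The three expression shapes above can be tried without a browser. The sketch below uses Python's built-in ElementTree, which supports only a subset of XPath and expects paths relative to the root, written with a leading `.//` instead of `//`. The sample markup is made up for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup used only for this demonstration.
SAMPLE_PAGE = """
<html>
  <body>
    <form id="login">
      <input name="username" type="text" />
      <input name="password" type="password" />
      <button type="submit">Sign in</button>
    </form>
  </body>
</html>
"""

root = ET.fromstring(SAMPLE_PAGE)

# //tagname: every element with the given tag name
all_inputs = root.findall(".//input")

# //*[@attribute='value']: any element with the given attribute value
submit_elements = root.findall(".//*[@type='submit']")

# //tagname[@attribute='value']: tag name and attribute value together
password_inputs = root.findall(".//input[@type='password']")

print(len(all_inputs), submit_elements[0].tag, password_inputs[0].get("name"))
```

In Selenium the same expressions are passed as strings, for example `driver.find_element(By.XPATH, "//input[@type='password']")`, and the browser's full XPath engine evaluates them.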

Using XPath Axes

XPath axes allow you to navigate the HTML structure relative to a selected node. Some common axes include:

ancestor: Selects all ancestors of the current node.

descendant: Selects all descendants of the current node.

parent: Selects the parent of the current node.

following-sibling: Selects all siblings after the current node.

XPath Functions

XPath provides several functions that can be used to manipulate strings, numbers, and other data types. Some common functions include:

contains(): Checks if a string contains a specified substring.

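text(): Selects the text nodes of an element. Strictly speaking it is a node test rather than a function, but it is used in the same way inside predicates.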

count(): Counts the number of nodes selected by an XPath expression.

Combining XPath Expressions

XPath expressions can be combined using logical operators such as and, or, and not to create more complex selectors. This allows you to target specific elements based on multiple criteria.

Handling Dynamic Elements

XPath can be used to handle dynamic elements, such as those generated by JavaScript or AJAX. By using XPath expressions that are based on the structure of the page rather than specific attributes, you can locate and interact with these elements reliably.

Best Practices for Using XPath in Selenium

Use the shortest XPath expression possible to avoid brittle tests.

Use relative XPath expressions whenever possible to make your tests more robust.

Use the // shortcut sparingly, as it can lead to slow XPath queries.

Examples of XPath in Selenium

Let's look at some examples of using XPath in Selenium to locate elements on a web page:

Locating an element by id: //*[@id='elementId']

Locating an element by class name: //*[contains(@class,'className')]

Locating an element by text content: //*[text()='some text']

Conclusion

XPath is a powerful tool for locating elements on a web page in Python automation testing. By understanding the basics of XPath expressions, axes, functions, and best practices, you can improve the reliability and maintainability of your automation tests. Start mastering XPath today and take your Selenium skills to the next level!

Frequently Asked Questions

What is XPath in Selenium?

In Selenium automation testing with Python, XPath is a query language used to locate elements on a web page based on their attributes, such as id, class, and name. It provides a powerful way to navigate the HTML structure of a web page and interact with elements.

Why should I use XPath in Selenium?

XPath is particularly useful in Selenium when other locating strategies, such as id or class name, are not available or reliable. It allows you to locate elements based on their position in the HTML structure, making it a versatile tool for automation testing with Python.

How do I use XPath in Selenium?

XPath expressions can be used to locate elements based on various criteria, such as attributes, text content, and position in the HTML structure. You can use XPath axes, functions, and logical operators to create complex selectors.

What are some best practices for using XPath in Selenium?

Some best practices for using XPath in Selenium include using the shortest XPath expression possible, using relative XPath expressions whenever possible, and avoiding the // shortcut to improve query performance.

Can XPath be used to handle dynamic elements in Selenium?

Yes, XPath can be used to handle dynamic elements, such as those generated by JavaScript or AJAX. By using XPath expressions that are based on the structure of the page rather than specific attributes, you can locate and interact with these elements reliably.


HTML Tutorial

HTML, short for Hyper Text Markup Language, is the backbone of web pages, serving as the structural foundation that browsers interpret to display content. It utilizes a series of tags to define different elements such as headings, paragraphs, images, links, and more. These tags structure the content, shaping the visual and functional aspects of a webpage.

Key Elements of HTML:

Tags and Elements: HTML relies on tags like <p> for paragraphs, <h1> through <h6> for headings, <img> for images, and <a> for links, each serving a unique purpose in structuring content.

Attributes: These add information to HTML elements, such as an image's source, a link's destination, or an element's class and id.

SEO Techniques in HTML:

Optimizing HTML content for search engines is vital for visibility. Implementing certain techniques enhances a website’s ranking and organic traffic:

Title Tags and Meta Descriptions: Crafting unique, keyword-rich title tags (<title>) and meta descriptions (<meta>) increases visibility in search engine results.

Semantic HTML: Utilizing proper tags for content helps search engines understand page structure, improving indexing and search relevance.

Mobile Optimization: Ensuring HTML is responsive and mobile-friendly is crucial for improved rankings, considering Google’s mobile-first indexing.

Content Marketing and HTML:

Combining HTML with a robust content strategy amplifies marketing efforts:

Structured Content: Properly structured HTML aids in presenting content in an organized manner, enhancing readability and user experience.

Rich Snippets: Employing structured data markup in HTML can enable rich snippets, enhancing a website’s appearance in search results and attracting more clicks.

Social Media Engagement and HTML:

HTML plays a role in social media strategies:

Open Graph Protocol: Implementing Open Graph meta tags in HTML allows for better control over how content appears when shared on platforms like Facebook, enhancing visibility and engagement.

Twitter Cards: Using Twitter Card markup in HTML enables richer media experiences when shared on Twitter, increasing engagement potential.

Website Optimization with HTML:

Optimizing websites involves HTML-centric strategies:

Page Load Speed: Well-structured HTML contributes to faster page loading, positively impacting user experience and SEO rankings.

Canonical Tags: Utilizing canonical tags in HTML prevents duplicate content issues, consolidating page authority and avoiding SEO penalties.

HTML forms the backbone of the internet, wielding immense power in shaping the digital landscape. Understanding its nuances and applying SEO techniques, content marketing strategies, social media engagement, and website optimization are pivotal steps toward enhancing visibility and driving organic traffic.


Code Less and Do More with AngularJS Top Features

Are you seeking to craft cutting-edge, high-performance web applications that stand out in today’s digital landscape?

AngularJS development is your gateway to dynamic, scalable, and robust web solutions. Embracing this innovative framework can propel your business towards its objectives by accelerating development timelines and optimizing costs.

In this article, we delve into the world of AngularJS, exploring its manifold benefits and guiding you to make informed decisions aligned with your project’s unique needs.

A Quick Look at AngularJS

AngularJS emerges as a beacon among open-source front-end frameworks, designed to empower developers in creating captivating web experiences. Championed by Google, this framework continually evolves, incorporating new features to amplify its capabilities. What sets Angular apart is its ability to seamlessly expand HTML syntax through a script tag, offering a clear and concise expression of an application’s components.

An inherent strength of AngularJS lies in its foundation in familiar HTML and JavaScript. This eliminates the need for developers to grapple with new languages or syntax, streamlining the development process for robust web solutions.

A reputable AngularJS development company aims to provide customized solutions for AngularJS web application development and to excel at Angular front-end development services. Such companies specialize in custom AngularJS development tailored to your distinct vision.

Exploring the Unique Benefits of AngularJS App Development Services

Simple to Learn, Increased Possibilities: AngularJS isn’t just a framework; it’s an accessible gateway for developers acquainted with HTML, JavaScript, and CSS, broadening horizons within the dynamic realm of web development. With a plethora of free online courses and tutorials, mastering AngularJS not only equips developers but also accelerates web application development, saving invaluable time along the way.

Seamless Two-Way Binding: AngularJS’s built-in two-way binding capability easily synchronizes the model and view. Changes made to the data in the model are immediately reflected in the view and vice versa, making the presentation layer easier to use and providing a non-intrusive method of UI construction, which in turn expedites the development process.

Enabling Single Page Applications (SPAs): AngularJS makes it possible to create SPAs very quickly, turning web pages into slick, app-like experiences. SPAs built with AngularJS provide quick transitions, great user experiences, platform compatibility, and easier maintenance by dynamically updating content rather than refreshing full pages.

Declarative UI for Collaboration: AngularJS, which creates templates with HTML, is a declarative framework that encourages communication between developers and designers. Developers use declarative binding syntax with AngularJS-specific elements and attributes, and designers concentrate on creating an intuitive user interface (UI) to improve readability and manipulation overall.

Google Endorsement: With Google’s support, AngularJS gains legitimacy and receives ongoing improvements from a skilled engineering staff. This support strengthens its dependability and creates a lively community where developers exchange ideas and experiences, which enhances the ecosystem even more.

Effortless MVC Pattern Management: By automatically wiring together the Model, View, and Controller (MVC) components, AngularJS simplifies code integration. Managing application data, displaying it, and handling the relationships between the two all become simpler, so developers find it easier to work with both the UI and the underlying data.

Robust, Feature-Rich Framework: Known for its resilience, AngularJS uses dependency injection, directives, and the MVC pattern to speed up front-end development. Because of its open nature, developers can extend HTML syntax and create more powerful client-side apps.

Built-In Testing Support: AngularJS lets developers test apps thoroughly by supporting both end-to-end and unit testing. Dependency injection is one of the features that helps here, because it makes individual program components easy to isolate and control. This aids error detection and resolution, which improves the overall quality of the application.

In summary:

Staying ahead in a changing business environment requires more than meeting market expectations; it also requires proactively connecting your products or services with your target market. Front-end apps are essential to this connection because businesses and their markets need to work in harmony.

AngularJS is more than simply an app development tool; it’s about connecting companies, encapsulating changing market conditions, and guaranteeing a competitive advantage. Businesses may match their plans with AngularJS to effectively connect with a continually expanding audience, resulting in success in a constantly changing ecosystem.

0 notes

Text

Enhancing User Experience with React Bootstrap Spinner Component

In web development, creating responsive and user-friendly interfaces is paramount. When it comes to showing loading or processing states in a web application, the React Bootstrap Spinner component is a valuable tool. It allows developers to add stylish and customizable spinners that enhance the user experience and provide visual feedback during data fetching or processing.

The React Bootstrap library is built on top of the popular Bootstrap framework and extends its components to integrate seamlessly with React applications. The Spinner component, a part of React Bootstrap, simplifies the process of incorporating loading indicators into your project. Here's a closer look at why the React Bootstrap Spinner is a great addition to your development toolkit:

Easy Integration:

The React Bootstrap Spinner component can be easily integrated into your React application by installing the necessary package and importing the component.

Customization:

Developers have the flexibility to customize the appearance of the spinner to match the design and branding of their application. This includes options for size, animation style, and color.

Accessibility:

React Bootstrap components are designed with accessibility in mind, ensuring that they can be used by all users, including those with disabilities. This includes proper semantic HTML structure and ARIA attributes.

Wide Range of Use Cases:

Spinners are commonly used to indicate that data is being loaded, submitted, or processed. This visual feedback is essential to keep users informed and engaged while waiting for content to appear.

Responsiveness:

React Bootstrap is known for its responsive design. The Spinner component adapts well to various screen sizes and resolutions, making it suitable for both desktop and mobile applications.

Integration with State Management:

The Spinner component can be easily integrated with popular state management libraries like Redux or React Context API. This enables developers to trigger and control the spinner based on the application's state.

Open Source and Community Support:

React Bootstrap is open-source and has an active community of contributors and users. This means that developers can find plenty of resources, tutorials, and community support when working with React Bootstrap components, including the Spinner.


A Guide to Basics of HTML from Start to Finish

1. Understanding HTML

HTML Tags: HTML is made up of tags, which are enclosed in angle brackets (<>). Tags define the structure and elements of a web page.

HTML Elements: Elements are composed of an opening tag, content, and a closing tag. For example, <p>Content goes here.</p>

Attributes: Many HTML tags have attributes that give additional information about the element. Attributes are always specified in the opening tag.

2. Basic Structure

Every HTML document should start with a <!DOCTYPE html> declaration to specify the version of HTML being used.

The basic structure of an HTML document is as follows:

<!DOCTYPE html>
<html>
  <head>
    <title>Page Title</title>
  </head>
  <body>
    <h1>Heading 1</h1>
    <p>Paragraph text goes here.</p>
  </body>
</html>

3. Head Section

The <head> section contains metadata about the document, such as the page title, character encoding, and links to external resources.

The <title> element sets the title of the web page that appears in the browser's tab.

4. Body Section

The <body> section contains the visible content of your web page.

Common text elements include headings (<h1>, <h2>, etc.), paragraphs (<p>), and lists (<ul>, <ol>, <li>).

5. Links

Create hyperlinks using the <a> (anchor) element. For example:

<a href="https://www.example.com">Visit Example</a>

6. Images;

Embed images using the element. Specify the image source using the src trait.

<img src="image.jpg" alt="Description of the image">

7. Lists:

Create ordered lists with <ol> and list items with <li>. For unordered lists, use <ul>.

8. Forms:

HTML forms allow user input. Use the <form> element to create a form, and elements like <input>, <textarea>, and <button> to collect data.

9. Tables:

Use the <table> element to create tables. Tables consist of rows (<tr>), table headers (<th>), and table data cells (<td>).
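To see how rows, header cells, and data cells nest, here is a minimal sketch that reads a small table into Python lists. It assumes Python's standard html.parser module; TableParser, SAMPLE_TABLE, and the sample data are made up for illustration.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of each <th>/<td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None       # cells of the row currently being read
        self._in_cell = False  # True while inside a <th> or <td>

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("th", "td") and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_data(self, data):
        # Text outside a cell (such as indentation) is ignored
        if self._in_cell:
            self._row[-1] += data

    def handle_endtag(self, tag):
        if tag in ("th", "td"):
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


# Hypothetical sample table, chosen only for illustration
SAMPLE_TABLE = """
<table>
  <tr><th>Name</th><th>Price</th></tr>
  <tr><td>Pen</td><td>1.50</td></tr>
  <tr><td>Notebook</td><td>3.25</td></tr>
</table>
"""

table_parser = TableParser()
table_parser.feed(SAMPLE_TABLE)
table_parser.close()
print(table_parser.rows)
```

Each inner list corresponds to one <tr>, with the header row first because it appears first in the markup.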

10. Comments:

You can add comments to your HTML code using the <!-- --> syntax. Comments aren't displayed in the browser but can be helpful for documentation.

11. HTML Validation:

It's essential to write valid HTML to ensure proper rendering in different browsers. You can validate your HTML using online tools or integrated browser tools.
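One small part of validation, checking that opening and closing tags pair up, can be sketched in a few lines. This is not a real validator and does not replace the tools mentioned above. It assumes Python's standard html.parser module, and the names BalanceChecker and check_balance are hypothetical. It is also stricter than the HTML standard, which allows some closing tags (such as </p> and </li>) to be omitted.

```python
from html.parser import HTMLParser

# Void elements never take a closing tag, so they are skipped
VOID_ELEMENTS = {
    "area", "base", "br", "col", "embed", "hr", "img",
    "input", "link", "meta", "source", "track", "wbr",
}


class BalanceChecker(HTMLParser):
    """Tracks open tags on a stack and notes closing tags that do not match."""

    def __init__(self):
        super().__init__()
        self.open_tags = []
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_ELEMENTS:
            self.open_tags.append(tag)

    def handle_endtag(self, tag):
        if tag in VOID_ELEMENTS:
            return
        if self.open_tags and self.open_tags[-1] == tag:
            self.open_tags.pop()
        else:
            self.problems.append(f"unexpected </{tag}>")


def check_balance(html):
    """Return a list of problems; an empty list means every tag paired up."""
    checker = BalanceChecker()
    checker.feed(html)
    checker.close()
    return checker.problems + [f"unclosed <{tag}>" for tag in checker.open_tags]


good = "<!DOCTYPE html><html><head><title>Page Title</title></head><body><p>Hi</p></body></html>"
bad = "<p><b>bold</p>"

print(check_balance(good))
print(check_balance(bad))
```

The first call reports no problems, while the second reports the mismatched </p> and the tags left open.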

12. Document Structure:

As your web page grows, consider dividing it into different sections using elements like <header>, <nav>, <main>, <section>, <article>, and <footer> for semantic and structural clarity.

13. Additional Resources:

HTML is just the beginning. To enhance your web pages, you will need to learn CSS (Cascading Style Sheets) for styling and JavaScript for interactivity. Refer to official documentation and online tutorials to deepen your knowledge and skills in HTML and web development.

This guide covers the basics of HTML, but there is much more to explore as you dive deeper into web development. Practice is key to learning HTML and creating well-structured web pages.

Text

Why Mindfire Solutions Prefers React JS for Innovative Frontend Development

As the digital realm continuously expands, a myriad of development tools present themselves to businesses. In this ever-evolving landscape, it's paramount for companies like Mindfire Solutions to align with technologies that not only serve the current needs but also are future-ready. Enter React JS – a game-changer in the frontend development arena.

A Brief Dive into React’s Evolution

React, born out of Facebook's innovative lab in 2011, was an answer to the challenges faced while integrating a universal chat feature across the platform. React addressed the commonly experienced DOM race condition bug which was a persistent issue for early web apps.

React's major contributions to the frontend sphere include the introduction of Flux architecture and innovations like 'deterministic view render' that fundamentally changed how developers approached view rendering.

What Exactly is React JS?

In simple terms, React is an open-source JavaScript library used to build user-friendly, efficient frontend interfaces for both single-page and multi-page web applications. Its versatility enables integration with other JS libraries, making frontend development not only faster but also more streamlined.

Why Mindfire Solutions Advocates for React JS

At Mindfire Solutions, our developers have found myriad reasons to favor React JS for our projects:

Component-Based Design: React’s architecture revolves around reusable components, simplifying UI design and ensuring consistency.

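Declarative Approach: Developers describe what the UI should look like for a given state, and React updates the page to match. This makes the development process smoother and debugging more efficient.

JSX Integration: JSX lets developers write HTML-like markup directly alongside JavaScript code.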

Virtual DOM: React's Virtual DOM streamlines updates and modifications, enhancing app performance.

SEO Capabilities: Faster rendering can support better SEO, which may help React-powered apps rank higher in search engine results.

Community Support: Being open-source, React boasts an extensive community, which is a goldmine of resources, tutorials, and instant troubleshooting.

React JS in Action – Real-World Applications

React’s flexibility has seen its application across various digital platforms. Some notable applications include:

Dashboards: React’s component-based approach and Virtual DOM make it ideal for data visualization dashboards.

E-Commerce Platforms: React’s code reusability and maintainability attributes make it suitable for e-commerce development.

Cross-Platform Mobile Apps: Using React Native, the transition from web apps to mobile applications is seamless.

Social Networks: Platforms like Facebook itself leverage React to optimize client-server interactions and improve overall performance.

Some industry stalwarts like Netflix, WhatsApp, Uber, and of course, Facebook and Instagram, trust React for their frontend development.

Why Choose Mindfire Solutions for React JS Development?

While there are numerous frameworks and libraries available, the choice largely depends on project-specific requirements. At Mindfire Solutions, our experienced team ensures that businesses get the best-fit technology solution, and more often than not, React JS stands out as the frontrunner. As experts in React JS Development, we guide businesses towards adopting this futuristic technology tailored to their needs.

Looking for a dependable partner for your next frontend development project? Mindfire Solutions is here to guide the way.

Text

How Useful is HTML?

HTML, or HyperText Markup Language, is the foundation of the web. It is a markup language that is used to create and structure web pages. HTML is used to define the content of a web page, such as text, images, and links. It also provides the structure that CSS builds on to control the layout and formatting of a web page.

HTML is a very useful language for anyone who wants to create or maintain web pages. It is a relatively easy language to learn, and there are many resources available online to help you get started. HTML is also a versatile language that can be used to create a wide variety of web pages.

Here are some of the benefits of learning HTML:

It is a basic skill that is essential for anyone who wants to work in web development. HTML is the foundation of web development, so anyone who creates or maintains web pages needs it.

It is a relatively easy language to learn, even for beginners. HTML uses a limited set of tags to define the content and structure of a web page.

There are many resources available online to help you learn HTML. Websites, tutorials, and books cover the language, and you can also find many online communities where you can ask questions and get help from other HTML learners.

HTML is supported by all major web browsers. It is a standard language, so your web pages will be accessible to everyone, regardless of the browser they are using.

HTML is a versatile language. It can be used to create a wide variety of web pages, from simple static pages to complex interactive applications.

If you are interested in creating your own web pages, or if you want to learn more about web development, then learning HTML is a great place to start. HTML is a powerful language that can be used to create a wide variety of web pages. With a little practice, you can learn how to create your own beautiful and functional web pages.

Here are some specific examples of how HTML is used:

To create the structure of a web page, such as the header, body, and footer. The <html> tag defines the beginning and end of an HTML document. The <head> tag contains information about the document, such as the title and the character encoding. The <body> tag contains the visible content of the document.

To add text, images, and other content to a web page. The <p> tag creates a paragraph. The <img> tag inserts an image into a web page. The <a> tag creates a link to another web page.

To create links to other web pages. The <a> tag creates a link to another web page. The href attribute of the <a> tag specifies the URL of the linked page.

To format the appearance of a web page, such as the font, size, and color of text. The <font> tag was once used to specify the font, size, and color of text, but it is obsolete in HTML5 and CSS should be used instead. The <style> tag is used to define custom CSS styles for a web page.

To create forms that users can fill out. The <form> tag creates a form. The <input> tag is used to create different types of form controls, such as text boxes, radio buttons, and check boxes.

To create tables that display data. The <table> tag creates a table. The <tr> tag creates a row in a table. The <td> tag creates a cell in a table.

These are just a few examples of how HTML is used. With a little practice, you can learn how to use HTML to create your own beautiful and functional web pages.
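As a small illustration of the <a> tag and its href attribute described above, the following sketch pulls every link out of a fragment of HTML. It assumes Python's standard html.parser module; LinkCollector and the sample markup are hypothetical and used only for demonstration.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects (href, link text) pairs for every <a> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            # The href attribute holds the URL of the linked page
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Hypothetical sample markup, chosen only for illustration
SAMPLE_HTML = (
    '<p>Read the <a href="https://www.example.com">example site</a> '
    'or the <a href="/about">about page</a>.</p>'
)

link_parser = LinkCollector()
link_parser.feed(SAMPLE_HTML)
link_parser.close()
print(link_parser.links)
```

Anchors without an href are skipped here, since they do not point to another page.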

If you want to learn HTML from scratch, you can visit these sites that I prefer:

e-Tuition

W3Schools

Vedantu

Coding Ninjas

Text

Unlock the potential of XML through our tutorial. Grasp the intricacies of markup, elements, and attributes. Learn to structure data logically, facilitating efficient sharing and integration across applications. Explore real-world examples, and elevate your understanding of XML's role in modern data-driven environments. Ideal for beginners and those seeking comprehensive insights.
